Note: To learn more about continual learning, check out my blog post: Continual Lifelong Learning with Deep Neural Nets.

Deep neural networks (DNNs) are prone to “forgetting” past knowledge, which has

been coined in the literature as catastrophic forgetting or

catastrophic interference [2, 4]. This limits the DNN’s ability to

perform continual lifelong learning as they face the “stability-plasticity”

dilemma when retaining memories. Stability refers to the preserving of existing

knowledge while, plasticity refers to integrating new knowledge.

Synaptic consolidation and Complementary Learning Systems

(CLS) are two theories that have been proposed in the neuroscience

literature to explain how humans perform continual learning. The first theory

proposes that a proportion of synapses in our neocortex becomes less

plastic to retain information for a longer timescale. The second theory

suggests that two learning systems are present in our brain: 1) the neocortex

slowly learns generalizable structured knowledge 2) the hippocampus performs

rapid learning of new experiences. The experiences stored in the the hippocampus

are consolidated and replayed to the neocortex in the form of episodic memories

to reinforce the synaptic connections.

One of the fundamental premises of neuroscience is Hebbian learning [3], which

suggests that learning and memory in biological neural networks are

attributed to weight plasticity, that is, the modification of the strength of

existing synapses according to variants of Hebb’s rule [7, 8].

Quick Summary

In our paper, we extend

differentiable plasticity [6] to a continual learning setting and develop

a model that is able to adapt quickly to changing environments as well as

consolidating past knowledge by dynamically adjusting the plasticity of

synapses. In the softmax layer, we augment the traditional (slow) weights used

to train DNNs with a set of plastic (fast) weights using Differentiable

Hebbian Plasticity (DHP). This allows the plastic weights to behave as an

auto-associative memory that can rapidly bind deep representations of the data

from the penultimate layer to the class labels. We call this new softmax layer

as the DHP-Softmax. We also show the flexibility of our model by

combining it with popular task-specific synaptic consolidation methods in the

literature such as: elastic weight consolidation (EWC) [4, 9], synaptic

intelligence (SI) [10] and memory aware synapses (MAS) [1]. Our model is

implemented using the PyTorch framework and trained on a single Nvidia Titan V.

Our Model

Each synaptic connection in our model is composed of two weights:

- The slow weights \(\theta \in \mathbb{R}^{m \times d}\), where \(m\) is the number of units in the final hidden layer and \(d\) is the number of classes.

# Initialize fixed (slow changing) weights with He initialization.

self.w = nn.Parameter(torch.Tensor(self.in_features, self.out_features))

nn.init.kaiming_uniform_(self.w, a=math.sqrt(5))

- The Hebbian plastic component of the same cardinality as the slow weights and

made up of two components:

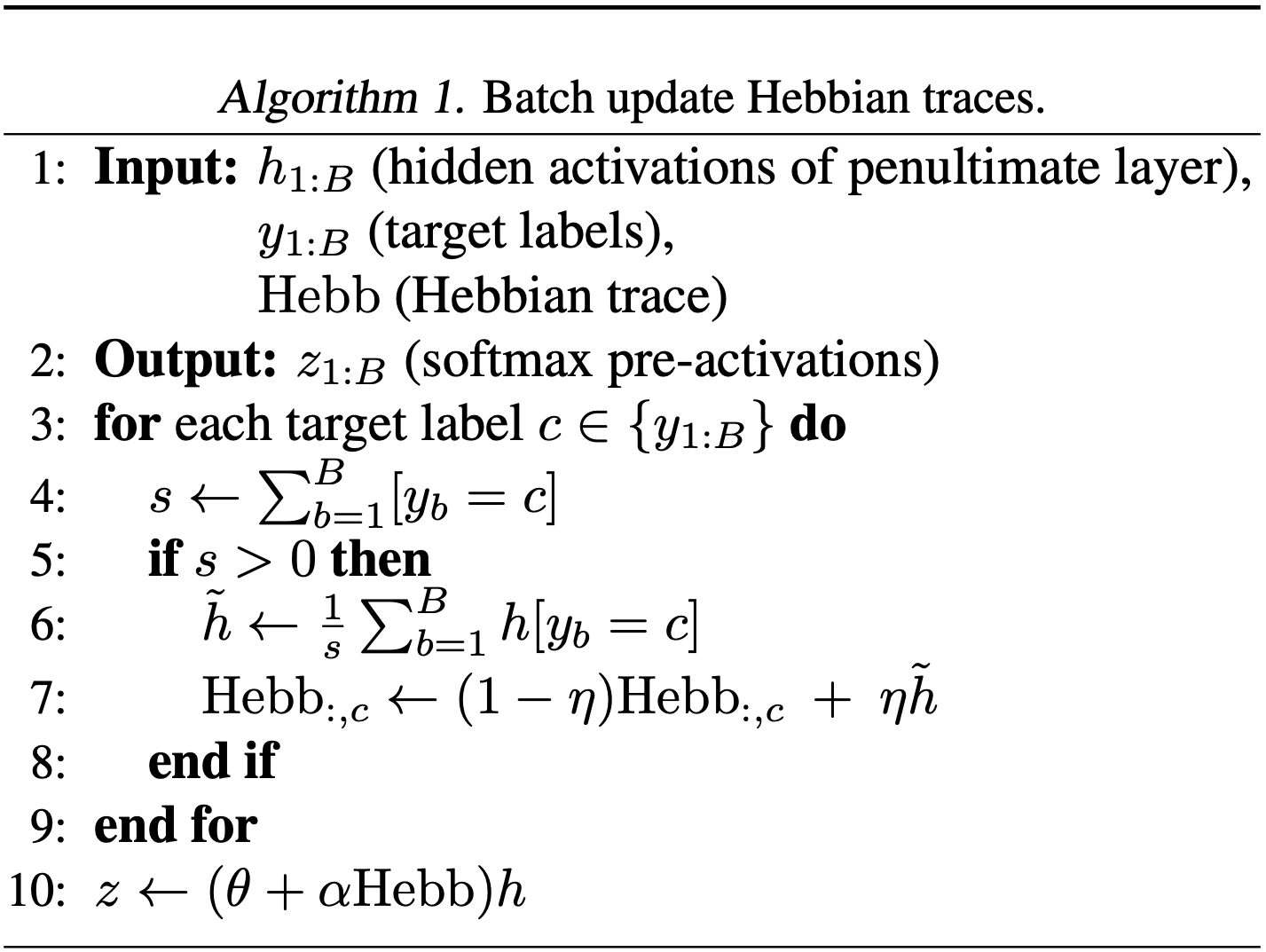

- Hebbian trace, \(\mathrm{Hebb}\) – accumulates the mean hidden activations of the mean activations of the penultimate layer for each target label in the mini-batch during training which are denoted by \(\tilde{h} \in \mathbb{R}^{1 \times m}\) (using Algorithm 1 as shown below).

- Plasticity coefficient, \(\alpha\) – adjusts the magnitude of \(\mathrm{Hebb}\).

# The alpha scaling coefficients of the plastic connections.

self.alpha = nn.Parameter((0.01 * torch.rand(self.in_features, self.out_features)), requires_grad=True)

# The learning rate of plasticity (the same for all plastic connections)

self.eta = nn.Parameter((self.eta_rate * torch.ones(1)), requires_grad=True)

Given the activation of each neuron in $h$ at the pre-synaptic connection $i$,

the unnormalized log probabilities $z$ at the post-synaptic connection $j$ can

be computed as follows:

Then, we apply the softmax function on \(z\) to obtain the desired logits

\(\hat{y}\) thus, \(\hat{y} = \mathrm{softmax}(z)\). The parameters

\(\alpha_{i,j}\) and \(\theta_{i,j}\) are optimized by gradient descent as the

model is trained sequentially on different tasks \(T_{1:nmax}\), where \(nmax\)

is the maximum number of tasks in the respective continual learning benchmarks

we experimented on.

Hebbian Update Rule

The Hebbian traces \(\mathrm{Hebb}\) are updated as follows:

where, \(\eta\) is the Hebbian learning rate that learns how quickly to acquire new

experiences into the plastic component. Here, \(\eta\) also behaves as a decay term to

prevent a positive feedback loop in the \(\mathrm{Hebb}\).

At the start of training the first task $T_{1}$, we initialize \(\mathrm{Hebb}\) to zero:

def initial_zero_hebb(self, device='cuda'):

self.device = device

return Variable(torch.zeros(self.in_features, self.out_features),

requires_grad=False).to(self.device)

Then we update the Hebbian traces in the forward pass using Algorithm 1 (see below). On line 6, we perform the Hebbian update for the corresponding class, \(c\). This hebbian update method forms a compressed episodic memory in the \(\mathrm{Hebb}\) that represents the memory traces for each unique class, \(c\), in the mini-batch. Across the model’s lifetime, we only update \(\mathrm{Hebb}\) during training and during test time, we use the most recent Hebbian traces to perform inference.

\(\hspace{60pt}\)

def forward(self, h, y, hebb):

# Only update Hebbian traces during training.

if self.training:

for _, c in enumerate(torch.unique(y)):

# Get indices of corresponding class, c, in y.

y_c_idx = (y == c).nonzero()

# Count total occurences of corresponding class, c in y.

s = torch.sum(y == c)

if s > 0:

h_bar = torch.div(torch.sum(h[y_c_idx], 0), s.item())

hebb[:,c] = torch.add(torch.mul(torch.sub(1, self.eta),

hebb[:,c].clone()), torch.mul(h_bar, self.eta))

# Compute softmax pre-activations with plastic (fast) weights.

z = torch.mm(h, self.w + torch.mul(self.alpha, hebb))

return z, hebb

Hebbian Update Visualization

An example of a Hebbian update for the active class c = 1 (see Line 4 in Algorithm 1):

\(\hspace{60pt}\)

Updated Quadratic Loss

Following the quadratic loss in the existing work for overcoming catastrophic forgetting such as EWC,

Online EWC, SI and MAS, we regularize the loss, \(\mathcal{L}^{n}(\theta, \alpha, \eta)\), where

\(\Omega_{i,j}\) is an importance measure for each slow weight \(\theta_{i,j}\)

and determines how plastic the connections should be. Here, least plastic

weights can retain memories for a longer period of time whereas, more plastic

weights are considered less important.

where, \(\theta_{i,j}^{n-1}\) are the learned network parameters after training on the previous \(n − 1\) tasks and \(\lambda\) is a hyperparameter for the regularizer to control the amount of forgetting.

We adapt these existing synaptic consolidation approaches to DHP-Softmax and

only compute the \(\Omega_{i,j}\) on the slow weights of the network. The

plastic part of our model can alleviate catastrophic forgetting of learned

classes by optimizing the plasticity of the synaptic connections.

Experiments: SplitMNIST Benchmark

A sequence of \(T_{n=1:5}\) tasks are generated by splitting the original MNIST

training dataset into a sequence of 5 binary classification tasks: \(T_1 =

\{0/1\}\), \(T_2 = \{2/3\}\), \(T_3 = \{4/5\}\), \(T_4 = \{6/7\}\) and \(T_5 =

\{8/9\}\), making the output spaces disjoint between tasks. We trained a

multi-headed MLP network with two hidden layers of 256 ReLU nonlinearities each,

and a cross-entropy loss. We compute the cross-entropy loss,

\(\mathcal{L}(\theta)\), at the softmax output layer for the digits present in

the current task, \(T_n\). We train the network sequentially on all 5 tasks

\(T_{n=1:5}\) with mini-batches of size 64 and optimized using plain SGD with a

fixed learning rate of 0.01 for 10 epochs. More details on experimental setup

and hyperparameter settings can be found in the Appendix section our paper.

\(\hspace{60pt}\)

We observe that DHP Softmax provides a 4.7% improvement on test performance

compared to a finetuned MLP network (refer to the graph above). Also, combining

DHP Softmax with task-specific consolidation consistently improves performance

across all tasks \(T_{n=1:5}\).

Conclusion

We show that catastrophic forgetting can be alleiviated by adding our DHP

Softmax to a DNN. Also, we demonstrate the flexibility and simplicity of our

approach by combining it with Online EWC, SI and MAS to regularize the slow

weights of the network. We do not introduce any additional hyperparameters as

all of the hyperparameters of the plastic component are learned dynamically by

the network during training. Finally, we hope this will foster new progress in

continual learning where, methods involving gradient-optimized Hebbian

plasticity can be used for learning and memory in DNNs.

Future Work

A natural extension of our work would be to apply DHP throughout all of the

layers in a feed forward neural network. Also, the current model relies on

labels to “auto-associate” the classes to deep representations. An interesting

line of work would be to perform the Hebbian update with a self-supervised

representation learning technique. It would also be interesting to see how this

approach will perform in the truly online setting where the network is expected

to maximize forward transfer from a small number of data points to learn new

tasks efficiently in a continual learning environment.

More Details

You can also read our ICML 2019 Adaptive and

Multi-Task Learning: Algorithms & Systems Workshop paper Differentiable

Hebbian Plasticity for Continual Learning.

References

[1] Aljundi, R., Babiloni, F., Elhoseiny, M., Rohrbach, M., and

Tuytelaars, T. Memory aware synapses: Learning what

(not) to forget. In The European Conference on Computer

Vision (ECCV), September 2018.

[2] French, R. Catastrophic forgetting in connectionist networks. Trends in Cognitive Sciences, 3(4):128–135, 1999.

[3] Hebb, D. O. The organization of behavior; a neuropsychological theory. Wiley, Oxford, England, 1949.

[4] Kirkpatrick, J., Pascanu, R., Rabinowitz, N., Veness, J., Desjardins, G., Rusu, A. A., Milan, K., Quan, J., Ramalho,

T., Grabska-Barwinska, A., Hassabis, D., Clopath, C.,

Kumaran, D., and Hadsell, R. Overcoming catastrophic

forgetting in neural networks. Proceedings of the National Academy of Sciences (PNAS), 114(13):3521–3526,

March 2017.

[5] McCloskey, M. and Cohen, N. J. Catastrophic interference in connectionist networks: The sequential learning problem. The Psychology of Learning and Motivation, 24: 104–169, 1989.

[6] Miconi, T., Stanley, K. O., and Clune, J. Differentiable plasticity: training plastic neural networks with backpropagation. In Proceedings of the 35th International Conference

on Machine Learning (ICML), pp. 3556–3565, 2018.

[7] Oja, E. Oja learning rule. Scholarpedia, 3(3):3612, 2008

[8] Paulsen, O. and Sejnowski, T. J. Natural patterns of activity

and long-term synaptic plasticity. Current Opinion in

Neurobiology, 10(2):172 – 180, 2000.

[9] Schwarz, J., Czarnecki, W., Luketina, J., GrabskaBarwinska, A., Teh, Y. W., Pascanu, R., and Hadsell, R.

Progress & compress: A scalable framework for continual

learning. In Proceedings of the 35th International Conference on Machine Learning (ICML), pp. 4535–4544,

2018.

[10] Zenke, F., Poole, B., and Ganguli, S. Continual learning

through synaptic intelligence. In Proceedings of the 34th

International Conference on Machine Learning (ICML),

pp. 3987–3995, 2017.